Scraping weather data into MySQL on a schedule


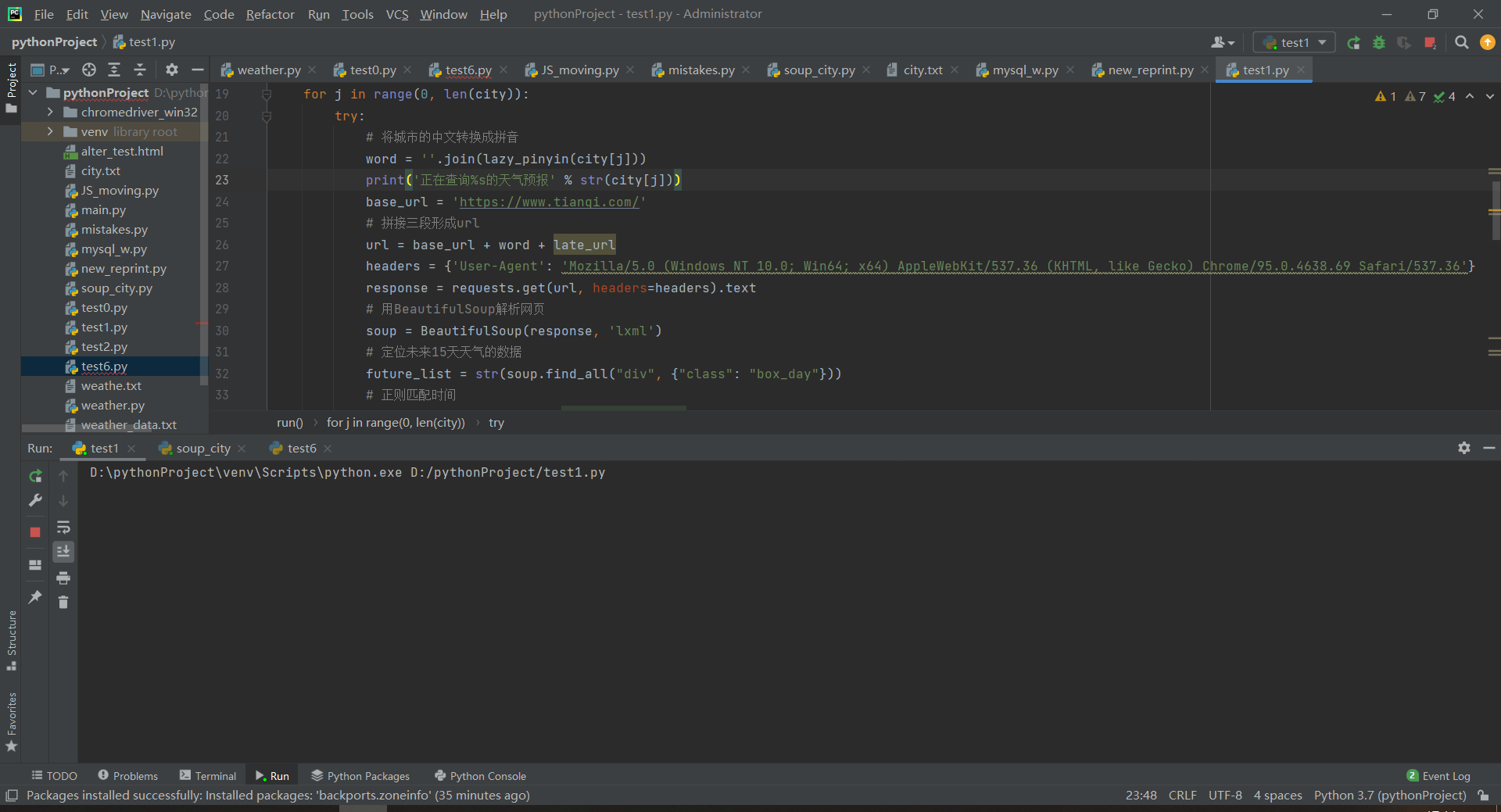

The program doesn't raise any errors, but it doesn't scrape anything either.

    import datetime
    import re
    import time

    import pandas as pd
    import pymysql
    import requests
    from bs4 import BeautifulSoup
    from pypinyin import lazy_pinyin


    def run():
        db = pymysql.connect(host='localhost', user='root', password='789456',
                             db='test', charset='utf8mb4')
        cursor = db.cursor()
        sql_insert = ('INSERT INTO new_d(date, city, temp, low, top, quality, wind) '
                      'VALUES (%s, %s, %s, %s, %s, %s, %s)')
        # Read the list of cities to scrape; '城市' ("city") is the column header.
        # Raw string so the backslash in the Windows path is taken literally.
        city = pd.read_excel(r'D:\city.xls')['城市']
        # URL suffix of the 15-day forecast page. It has to be a str: with a
        # list here, the concatenation below raises TypeError (str + list).
        late_url = '/15'
        for j in range(len(city)):
            try:
                # Convert the Chinese city name to pinyin
                word = ''.join(lazy_pinyin(city[j]))
                print('Querying the weather forecast for %s' % str(city[j]))
                base_url = 'https://www.tianqi.com/'
                # Join the three parts into the URL
                url = base_url + word + late_url
                headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) '
                                         'AppleWebKit/537.36 (KHTML, like Gecko) '
                                         'Chrome/95.0.4638.69 Safari/537.36'}
                response = requests.get(url, headers=headers).text
                # Parse the page with BeautifulSoup
                soup = BeautifulSoup(response, 'lxml')
                # Locate the data for the next 15 days
                future_list = str(soup.find_all("div", {"class": "box_day"}))
                # Extract the fields with regular expressions
                # ('空气质量' is the page's "air quality" label)
                date_list = re.findall(r'<h3><b>(.*?)</b>', future_list)
                temp_list = re.findall(r'<li class="temp">(.*?)</b>', future_list)
                quality_list = re.findall(r'空气质量:(.*?)">', future_list)
                wind_list = re.findall(r'<li>(.*?)</li>', future_list)
                print(quality_list)
                for n in range(len(date_list)):
                    date = date_list[n]
                    temp = temp_list[n]
                    quality = quality_list[n]
                    wind = wind_list[n]
                    # First token is the weather text, second is "low~<b>high"
                    fir_temp_list = temp.split(' ')[0]
                    sec_weather_list = temp.split(' ')[1]
                    new_list = sec_weather_list.split('~<b>')
                    low = new_list[0]
                    top = new_list[1]
                    print(date, fir_temp_list, low, top, quality, wind)
                    cursor.execute(sql_insert, (date, city[j], fir_temp_list,
                                                low, top, quality, wind))
                db.commit()
                time.sleep(1)
            except Exception as e:
                # Report the failure; a bare `except: pass` hides every error
                print('Failed for %s: %r' % (city[j], e))
        db.close()


    if __name__ == '__main__':
        # Check every 20 seconds whether it is 09:30 yet
        while True:
            now = datetime.datetime.now()
            if now.hour == 9 and now.minute == 30:
                run()
                # Sleep past the 09:30 minute so the job does not start twice
                time.sleep(60)
            else:
                time.sleep(20)
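A symptom like "no error, but no data" usually means an exception is being raised and then discarded. As a minimal sketch (the function names `build_url_silently` and `build_url_reporting` are hypothetical, and nothing here touches the network), the following shows how a bare `except: pass` hides the `TypeError` raised by concatenating a `str` with a `list`, and how catching `Exception` and printing it makes the failure visible:

```python
def build_url_silently(word, suffix):
    """Mimic a scraper loop body that swallows every error (hypothetical helper)."""
    try:
        return 'https://www.tianqi.com/' + word + suffix
    except:  # bare except: any exception raised above is silently discarded
        pass  # falls through, so the function returns None


def build_url_reporting(word, suffix):
    """Same logic, but the failure is reported instead of hidden (hypothetical helper)."""
    try:
        return 'https://www.tianqi.com/' + word + suffix
    except Exception as exc:
        print('failed to build URL for %s: %r' % (word, exc))
        return None


# A list suffix raises TypeError (str + list); a str suffix works.
silent = build_url_silently('beijing', ['/15'])
reported = build_url_reporting('beijing', ['/15'])
ok = build_url_reporting('beijing', '/15')
print(silent, reported, ok)
```

With the silent version the loop simply moves on to the next city, so nothing is printed or inserted and no traceback appears. Printing the exception (or letting it propagate while debugging) is usually the quickest way to find the real cause.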

Below is the code that scrapes the city names.

    import requests
    from bs4 import BeautifulSoup

    url = "https://www.tianqi.com/chinacity.html"
    header = {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) "
                            "AppleWebKit/537.36 (KHTML, like Gecko) "
                            "Chrome/95.0.4638.69 Safari/537.36"}
    res = requests.get(url=url, headers=header)
    # Hand the raw bytes to BeautifulSoup and have it decode them as UTF-8
    soup = BeautifulSoup(res.content, "html.parser", from_encoding="utf-8")
    # Get all the <a> tags inside the city box
    city = soup.find('div', class_="citybox")
    a = city.find_all('a')
    # One city name per line; `with` closes the file when the loop is done
    with open('city.txt', 'w', encoding='utf-8') as f:
        for di in a:
            text = di.get_text()
            print(text)
            f.write(text + '\n')
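The script above writes one city name per line to `city.txt`, whereas the weather script reads its cities from an Excel sheet. If the text file were used as the input instead, a loader could look roughly like the sketch below. The helper name `load_cities` is hypothetical, and skipping blank lines and duplicates is an assumption about what is wanted, not something the original scripts do.

```python
import os
import tempfile


def load_cities(path):
    """Return city names from a one-name-per-line UTF-8 file (hypothetical helper).

    Blank lines are skipped and only the first occurrence of each name is kept.
    """
    seen = set()
    cities = []
    with open(path, encoding='utf-8') as f:
        for line in f:
            name = line.strip()
            if name and name not in seen:
                seen.add(name)
                cities.append(name)
    return cities


# Demonstration with a temporary file standing in for city.txt
with tempfile.TemporaryDirectory() as tmp:
    sample_path = os.path.join(tmp, 'city.txt')
    with open(sample_path, 'w', encoding='utf-8') as f:
        f.write('北京\n上海\n\n北京\n广州\n')
    sample = load_cities(sample_path)
print(sample)
```

The returned list can be iterated in place of the pandas column, for example `for name in load_cities('city.txt'): ...`.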